Full Stack NiiVue

Fullstack cloud deployment for medical imaging.

Goal

As demand grows for secure and efficient web-based processing, this project aims to provide a demo that helps developers integrate and customize cloud providers and analysis tools for scalable, secure neuroimaging workflows. Because processing steps are usually time-consuming and can’t realistically be run on the client side alone, building a full-stack app became the obvious path forward. That way, the heavy lifting stays on the backend while the browser focuses on visualization and interaction.

This project was also part of Google Summer of Code (GSoC) 🌟, and it was awesome to have mentorship and guidance along the way.

Implementation

-

Browser-Native Visualization Niivue: Niivue enables GPU-accelerated rendering of medical images directly in the browser, supporting different medical imaging formats in a modular approach that helps developers easily implement it in their application.

-

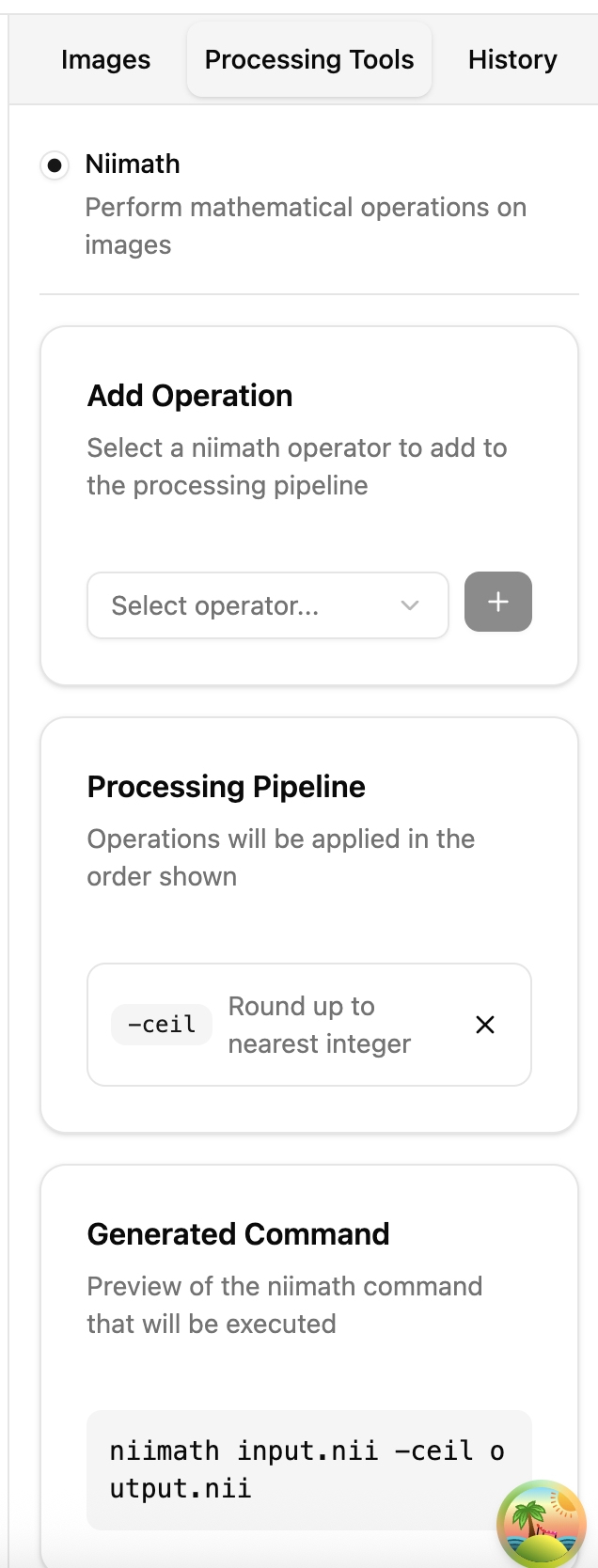

Modular Processing (NiiMath + Backend): A curated set of NiiMath operations is implemented, but the backend is designed for developers to add or swap in their own processing pipelines.

-

Secure Authentication WorkOS: Built-in auth ensures sensitive medical data is handled securely, with a modular interface that supports swapping to JWT, OAuth, or other auth providers.

-

Persistent Data Layer (SQLModel + PostgreSQL): Provides structured storage for users, images, and metadata, ready for extension into clinical or research workflows.
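To make the “add or swap in their own processing pipelines” idea a bit more concrete, here is a minimal sketch of a tool registry. It is not the code from the demo repo: the names `TOOLS`, `register_tool`, and `build_command` are hypothetical, and the example only builds a niimath command line (using the fslmaths-style `-s` and `-bin` operations) without running it.

```python
from typing import Callable, Dict, List

# Hypothetical registry: maps a tool name to a function that builds a
# niimath command line. These names are illustrative, not from the repo.
CommandBuilder = Callable[[str, str], List[str]]
TOOLS: Dict[str, CommandBuilder] = {}


def register_tool(name: str) -> Callable[[CommandBuilder], CommandBuilder]:
    """Decorator that adds a command builder to the registry under `name`."""
    def decorator(builder: CommandBuilder) -> CommandBuilder:
        TOOLS[name] = builder
        return builder
    return decorator


@register_tool("smooth")
def smooth(input_path: str, output_path: str) -> List[str]:
    # -s <sigma>: Gaussian smoothing with the given sigma (2 here is arbitrary).
    return ["niimath", input_path, "-s", "2", output_path]


@register_tool("binarize")
def binarize(input_path: str, output_path: str) -> List[str]:
    # -bin: set every voxel greater than zero to 1.
    return ["niimath", input_path, "-bin", output_path]


def build_command(tool_name: str, input_path: str, output_path: str) -> List[str]:
    """Look up a registered tool and return the command it would run."""
    try:
        builder = TOOLS[tool_name]
    except KeyError:
        raise ValueError(f"Unknown tool: {tool_name!r}") from None
    return builder(input_path, output_path)


print(build_command("smooth", "T1.nii.gz", "T1_smooth.nii.gz"))
```

With a layout like this, adding a new pipeline means registering one more function, and the rest of the backend only needs the tool name.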

Check out the current work by following the setup instructions in the repo: https://github.com/niivue/fullstack-niivue-demo

Contributions

My main contributions to the implementation are in two PRs 💅🏻:

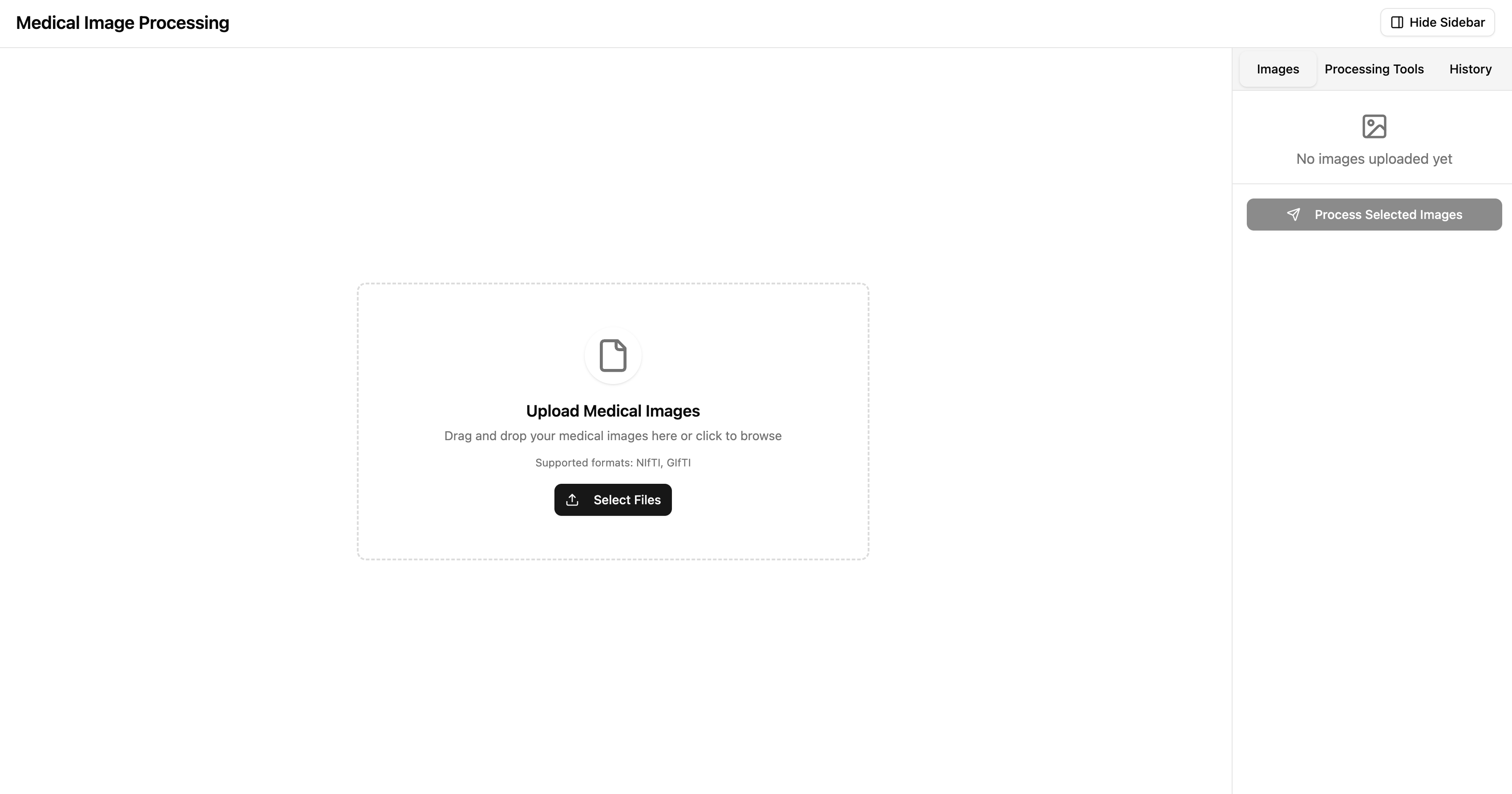

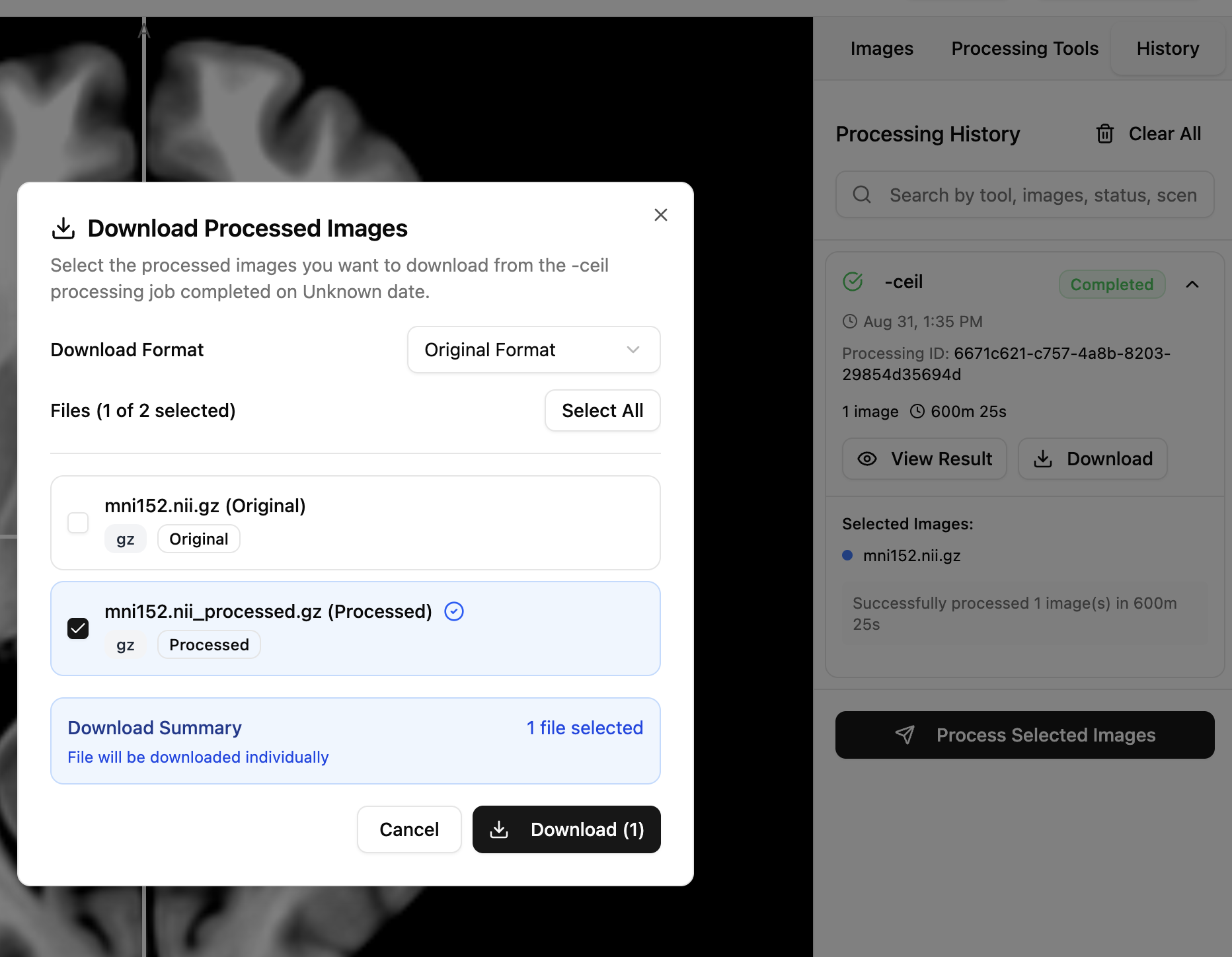

This is what the app looks like:

Scene - Upload Images

Scene - Select Processing Tool

Scene - View Results

Takeaways

User Interface

A big part of this project was making sure the user interface felt practical for people who actually work with neuroimaging data. Having Paul Wighton on board really helped here. The current download feature is inspired by and borrows from Paul’s work: he pointed out that users should be able to download both the scene and the images straight from the browser. His guidance shaped the way we approached the UI, making it not just about visualization but also about packaging results and keeping workflows portable.

On the technical side, Chris Rorden caught a tricky issue with the MGH format (used in FreeSurfer). The problem came down to data types: some files were being loaded in ways that consumed more memory than necessary. Chris optimized how Niivue handles MGH files so that when the format is detected, it automatically chooses more efficient data types when possible. That fix made a noticeable difference: it not only reduced memory usage but also improved performance when working with larger datasets in the browser.

Even though these weren’t my contributions, seeing how the mentors spotted these sneaky issues and made the tool more user-friendly and resource-efficient was really eye-opening. It showed me how paying attention to tiny details can have a big impact on the overall user experience and performance.
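To put the data type point in numbers, here is some back-of-the-envelope arithmetic. This is not the Niivue fix itself, just an illustration of why the voxel type matters; the 256×256×256 grid is an assumed example size (the shape of a conformed FreeSurfer volume), and the helper name is made up.

```python
# Bytes per voxel for the voxel types an MGH/MGZ header can declare.
BYTES_PER_VOXEL = {"uint8": 1, "int16": 2, "int32": 4, "float32": 4}


def volume_mebibytes(dims, dtype):
    """Return the in-memory size of one volume in MiB for the given voxel type."""
    n_voxels = 1
    for d in dims:
        n_voxels *= d
    return n_voxels * BYTES_PER_VOXEL[dtype] / 1024**2


dims = (256, 256, 256)  # assumed example grid
for dtype in ("uint8", "int16", "float32"):
    print(f"{dtype}: {volume_mebibytes(dims, dtype):.0f} MiB")
```

Holding an 8-bit volume as 32-bit floats quadruples its footprint, which adds up quickly once several volumes are open in one browser tab.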

Store NiiVue’s NVDocument in database

One of the really nice parts of this project was how easy it was to slot NiiVue’s NVDocument straight into the database. Since Niivue designed it to be modular and flexible, it felt natural to store it as a JSON field in the SceneBase model:

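    from datetime import datetime
    from typing import Any, Dict

    from sqlalchemy import JSON, Column
    from sqlmodel import Field, SQLModel

    # ProcessingStatus is defined elsewhere in the project (not shown here).
    class SceneBase(SQLModel):
        timestamp: datetime = Field(default_factory=datetime.now)
        nv_document: Dict[str, Any] = Field(sa_column=Column(JSON))
        tool_name: str | None = Field(default=None, max_length=255)
        status: ProcessingStatus = Field(default=ProcessingStatus.PENDING)
        result: Dict[str, Any] | None = Field(default=None, sa_column=Column(JSON))
        error: str | None = Field(default=None, max_length=255)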

nv_document captures the Niivue scene, including the NVImages loaded into the canvas. Here’s what a stored nv_document might look like in practice:

    {
      "title": "some title",
      "imageOptionsArray": [
        {
          "url": "data/ds00/T1.mgz",
          "name": "T1.mgz",
          "resultUrl": "data/ds00/T1_result.mgz"
        }
      ]
    }

The cool part is that Niivue made NVDocument super flexible. For example, inside imageOptionsArray, you can tack on fields like colormap or customData without breaking anything:

    {
      "title": "custom view",
      "imageOptionsArray": [
        {
          "url": "data/ds01/brain.nii.gz",
          "name": "brain.nii.gz",
          "opacity": 0.8,
          "colormap": "hot",
          "customData": "processed with threshold=0.5"
        }
      ]
    }

Because we store it as JSON, the backend doesn’t need to know ahead of time what all the possible fields will be, so Niivue can keep adding new features and we can support them right away without touching the schema.

What made this even better was how quickly the Niivue team integrated feedback. Whenever a feature gap came up in this project (like storing extra rendering options or linking result data), they were fast to adjust the spec or add hooks so it just worked. This project benefited from a tool that was evolving alongside developer needs. In particular, Chris Drake’s PR on the sparse document option made working with NVDocument way more lightweight and the documents easier to store.
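The schema-free behaviour described above can be tried out with a small round trip. This sketch deliberately swaps in the standard library’s `sqlite3` and a plain text column in place of SQLModel and PostgreSQL so it runs anywhere; the table layout and the `save_scene` / `load_scene` helpers are hypothetical, not taken from the repo.

```python
import json
import sqlite3


def save_scene(conn, nv_document):
    """Serialize the document to JSON and store it; returns the new row id."""
    cur = conn.execute(
        "INSERT INTO scene (nv_document) VALUES (?)",
        (json.dumps(nv_document),),
    )
    conn.commit()
    return cur.lastrowid


def load_scene(conn, scene_id):
    """Load a stored document back into a Python dict."""
    row = conn.execute(
        "SELECT nv_document FROM scene WHERE id = ?", (scene_id,)
    ).fetchone()
    if row is None:
        raise KeyError(scene_id)
    return json.loads(row[0])


# In-memory stand-in for the real database; one JSON column, no per-field schema.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE scene (id INTEGER PRIMARY KEY, nv_document TEXT NOT NULL)"
)

basic = {
    "title": "some title",
    "imageOptionsArray": [{"url": "data/ds00/T1.mgz", "name": "T1.mgz"}],
}
custom = {
    "title": "custom view",
    "imageOptionsArray": [
        {
            "url": "data/ds01/brain.nii.gz",
            "name": "brain.nii.gz",
            "opacity": 0.8,
            "colormap": "hot",
            "customData": "processed with threshold=0.5",
        }
    ],
}

basic_id = save_scene(conn, basic)
custom_id = save_scene(conn, custom)

# The extra fields survive without any schema change.
print(load_scene(conn, custom_id)["imageOptionsArray"][0]["colormap"])
```

Both documents go into the same column even though the second carries fields the first does not, which is the property the JSON field in SceneBase relies on.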

Authentication

JupyterHub

Before adopting WorkOS for authentication, we initially explored JupyterHub Authenticator as the authentication backbone. While JupyterHub is a mature and powerful platform for managing users and computational environments, its integration into this project revealed several challenges:

- Frontend Integration via Jinja Templates

  - JupyterHub authentication flows are rendered using Jinja templates, which required the frontend application to be bundled and served through the template system.

  - This limited flexibility compared to a decoupled frontend-backend architecture, as static assets and dynamic pages had to be aligned with JupyterHub’s templating system.

- Complex Configuration Surface

  - JupyterHub offers a wide range of configuration options (spawners, authenticators, proxy settings, role management), which is powerful but also overwhelming when the goal is to set up a minimal, extensible authentication layer.

  - The abundance of options created a steep learning curve, requiring careful tuning of YAML configs, deployment scripts, and cloud connectors.

Despite these challenges, JupyterHub remains an attractive option because it integrates naturally with existing servers and cloud providers, offering pluggable authenticators for OAuth, GitHub, Google, and institutional SSO systems. It provides robust security mechanisms out of the box, including role-based access and integration with Kubernetes and cloud infrastructures.

WorkOS

However, within the time available, I couldn’t figure out how to get an access token from JupyterHub and validate it against the local database. So I moved forward with WorkOS, which Taylor Hanayik, one of my mentors, recommended for its balance of simplicity and security, something that was missing in the earlier attempts with JupyterHub.

I chose password-based authentication. Once authenticated, the system retrieves a sealed session backed by a refresh token. The session lifetime was configured to 5 hours (adjustable from the WorkOS dashboard), offering a practical balance: long enough to avoid frequent reauthentication during active use, but short enough to mitigate risks if a session is compromised. Developers can also modify this setup to integrate other OAuth or SSO methods if needed.

Future work

- Cloud Deployment with Terraform: Using Terraform, we can automate deployment to the cloud, managing infrastructure as code for scalability and reproducibility. This will make it easier to spin up full-stack environments for testing, collaboration, or production use.

- Job Queueing with Redis: A queue would ensure tasks are handled reliably, avoid dropped jobs, and keep the server responsive for other users.

- Multiprocessing with Celery: Running tasks in worker processes would use server resources more efficiently.

- Testing: Cover user authentication, NVDocument processing, and scene result handling.
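As a rough picture of what the job queueing item is after, here is an in-process stand-in built on the standard library’s `queue` and `threading`. It is only a sketch of the pattern: a real setup would put Redis and Celery workers in place of the queue and thread, and the job tuple layout and status strings are assumptions made for this example.

```python
import queue
import threading


def run_worker(jobs, results):
    """Pull jobs off the queue until a None sentinel arrives."""
    while True:
        job = jobs.get()
        if job is None:  # sentinel: no more work
            jobs.task_done()
            break
        job_id, func, args = job
        try:
            results[job_id] = ("completed", func(*args))
        except Exception as exc:
            # A failing job is recorded instead of taking the worker down.
            results[job_id] = ("failed", str(exc))
        finally:
            jobs.task_done()


jobs = queue.Queue()
results = {}
worker = threading.Thread(target=run_worker, args=(jobs, results), daemon=True)
worker.start()

# Submitting work returns immediately; the caller is free to serve other requests.
jobs.put((1, sum, ([1, 2, 3],)))
jobs.put((2, int, ("not a number",)))  # this job will fail
jobs.put(None)

jobs.join()    # wait until every queued item has been processed
worker.join()
print(results[1])
print(results[2][0])
```

The point of the pattern is that a slow or failing processing step is isolated in the worker, and every job ends up with a recorded status instead of being silently dropped.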